Creating a Neurofeedback Program With Python

Recently, I’ve been building small projects with Brain-Computer Interfaces (BCI) and wanted to tackle something a little bit bigger. Neurofeedback has been getting more popular nowadays with companies like Muse, and Neurosity using neurofeedback in their BCIs. So I decided to build something related to neurofeedback and checking your relaxation and concentration to have some fun.

The Science

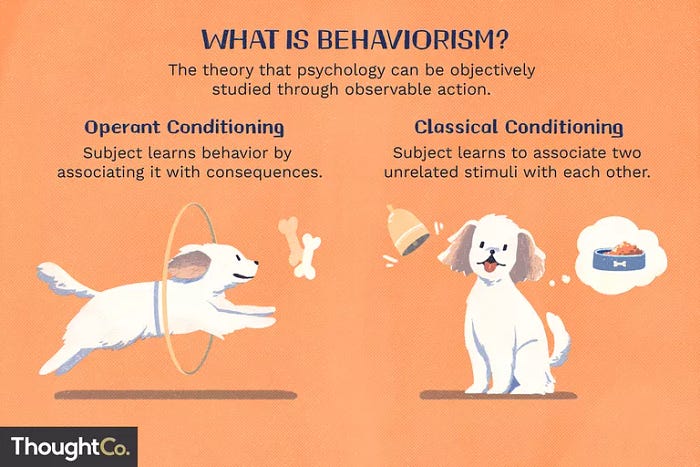

Neurofeedback is a form of biofeedback that leverages developments in BCIs and neuroscience. Our brain's neuroplasticity (our brain's ability to change, grow and learn) allows us to restructure our brain's habits using a learning method called operant conditioning: a technique that involves rewarding good behavior and punishing bad behavior.

The concept of operant conditioning sounds familiar, doesn’t it? Believe it or not, you can train your brain like you would train a dog. Imagine that you are training your dog to roll over, you would need to:

- Get your dog’s favorite treat (reward)

- Make him rollover (action)

- Feed him the treat if he rolls over successfully (rewarding positive actions)

- Rinse and repeat until he can do it by himself. (self-regulation)

Normally in neurofeedback, you would also punish bad behavior… But who would punish a dog? That’s just cruel. However, the rest of the process for training relaxation using neurofeedback is pretty similar to training a dog to roll over:

- A user uses an EEG (type of BCI) to play a brain-controlled game (method to reward and punish).

- The user tries to relax (action).

- The user relaxes and starts to win in the game (rewarding positive actions).

- The user gets agitated and starts to lose in the game (punishing negative actions).

- Rinse and repeat until the user can do it by themselves (self-regulation of brain activity).

Neurofeedback in concept is fairly simple and straightforward, the execution of it is the difficult part. If you want to read more about neurofeedback and the neuroscience behind here, check out my other article.

The Code

To build this project, I used Brainflow, which is a Python module for interacting with biosensors, but mainly BCIs. The documentation is fairly robust with bindings to Python, C++, Java, C#, and support for Julia, Matlab, and R. If you want to start building with BCIs, you should start with Brainflow. Here is the direct link to their documentation.

I used Brainflow-Python to create a live graph of my EEG data as well as analyze it and check if I’m relaxed or concentrated. You can find my code here, and at the bottom of this article where a Github Gist will be posted. Regardless, I’ll be walking you guys through how I built this project and how you can build it yourself.

What you need

- Brainflow compatible BCI (list of supported boards) and related equipment. I used the OpenBCI Ganglion Board for my live test, but when I was building out the program, I used a synthetic board for convenience.

- Python Dependencies

- Numpy

- Pandas

- Brainflow

- Matplotlib

What you do

First, we need to import all of our dependencies

import pandas as pd

import time

from matplotlib import style

from matplotlib.animation import FuncAnimation

import matplotlib.pyplot as plt

import brainflow

import numpy as np

from brainflow.board_shim import BoardShim, BrainFlowInputParams, LogLevels, BoardIds

from brainflow.data_filter import DataFilter, FilterTypes, AggOperations

from brainflow.ml_model import MLModel, BrainFlowMetrics, BrainFlowClassifiers, BrainFlowModelParamsAs explained in the Brainflow docs:

BrainFlow User API has three main modules:

BoardShim to read data from a board, it calls methods from underlying BoardController library

DataFilter to perform signal processing, it calls methods from underlying DataHandler library

MLModel to calculate derivative metrics, it calls methods from underlying MLModule library

We import all 3 modules of Brainflow because we need all of them to collect, filter, and analyze our EEG data.

The other interesting (sub)package that we are importing is the animation function of Matplotlib

from matplotlib.animation import FuncAnimationWhat this function does is essentially repeatedly call our function so that the graph updates live. Afterward, we need to start building out our program.

def main(i):

BoardShim.enable_dev_board_logger()# using synthetic board for demo

params = BrainFlowInputParams()

board_id = BoardIds.SYNTHETIC_BOARD.value

board = BoardShim(board_id, params)

eeg_channels = BoardShim.get_eeg_channels(board_id)

sampling_rate = BoardShim.get_sampling_rate(board_id)

timestamp = BoardShim.get_timestamp_channel(board_id)

The initial part of the function starts with defining some variables for us. First, we create a Brainflow object and define the BCI that we are using (fake synthetic board for testing). Note: the main function takes a parameter because of the matplotlib.animation submodule requires the main function to have a positional argument.

params = BrainFlowInputParams()

board_id = BoardIds.SYNTHETIC_BOARD.value

board = BoardShim(board_id, params)Then we initialize variables that will be useful for us to subset Dataframes and get data later.

eeg_channels = BoardShim.get_eeg_channels(board_id)

sampling_rate = BoardShim.get_sampling_rate(board_id)

timestamp = BoardShim.get_timestamp_channel(board_id)Data Format

Before we go any further with the tutorial, we need to understand Brainflow’s data format. Data from Brainflow comes in a 2D Numpy array which contains all the data coming from the BCI. I call this data, “raw data,” this could include EEG, EMG, Accelerometer, and Timestamp channels.

Methods like:

eeg_channels = BoardShim.get_eeg_channels(board_id)

timestamp = BoardShim.get_timestamp_channel(board_id)Give us the index number of the channel (it’s really more of a row) for each data point. For example, eeg_channels would contain the index number for all the channels containing EEG data. We can then use this to subset the raw data and turn this:

# the ... are due to the high amount of channels and data

[[ 0.00000000e+00 1.00000000e+00 2.00000000e+00 ... 1.26000000e+02

1.27000000e+02 1.28000000e+02]

[ 1.82739956e+00 3.66747424e+00 5.15489849e+00 ... -3.52875167e+00

-5.23466887e+00 -7.10037698e+00]

[ 8.85772805e+00 1.47308226e+01 1.86436204e+01 ... 1.35753732e+01

1.86502215e+01 2.52375351e+01]

...

[ 1.27063761e+03 1.08653285e+03 1.22228982e+03 ... 9.45783959e+02

9.30405288e+02 9.25080058e+02]

[ 9.50000000e+01 9.50000000e+01 9.50000000e+01 ... 9.50000000e+01

9.50000000e+01 9.50000000e+01]

[ 1.61905649e+09 1.61905649e+09 1.61905649e+09 ... 1.61905649e+09

1.61905649e+09 1.61905649e+09]]into this:

#raw data subsetted with eeg_channels. notice how all data looks similar and there's less of it.

[[ 1.77435186 3.67017251 4.95847233 ... -6.87407484

-7.98488819 -9.88828034]

[ 9.0846473 15.9683001 19.27453404 ... 25.81676295

29.75789509 25.50070406]

[ 19.07741529 27.72634504 35.31312953 ... -42.85784908

-36.33519987 -29.54014251]

...

[ 117.30807714 -115.46988706 -113.57079944 ... 249.48141363

-4.72746065 -276.57398033]

[ 153.63433872 -331.41590935 22.17759501 ... -169.70049515

202.9771611 176.43798055]

[ 143.8791394 -297.01111573 36.82472364 ... 88.29982729

-315.03149869 90.70746399]]Now that you understand how subsetting data works, let’s get back into the tutorial!

Back to the tutorial

board.prepare_session()

board.start_stream()

style.use('fivethirtyeight')

plt.title("Live EEG stream from Brainflow", fontsize=15)

plt.ylabel("Data in millivolts", fontsize=15)

plt.xlabel("\nTime", fontsize=10)

keep_alive = True eeg1 = [] #lists to store eeg data

eeg2 = []

eeg3 = []

eeg4 = []

timex = [] #list to store timestampwhile keep_alive == True: while board.get_board_data_count() < 250:

time.sleep(0.005)

data = board.get_current_board_data(250) eegdf = pd.DataFrame(np.transpose(data[eeg_channels]))

eegdf_col_names = ["ch1", "ch2", "ch3", "ch4"]

eegdf.columns = eegdf_col_names

timedf = pd.DataFrame(np.transpose(data[timestamp]))

This is a lot of code, but it’s fairly simple to understand when you start reading the code and the logic behind it.

board.prepare_session()

board.start_stream()

style.use('fivethirtyeight')

plt.title("Live EEG stream from Brainflow", fontsize=15)

plt.ylabel("Data in millivolts", fontsize=15)

plt.xlabel("\nTime", fontsize=10)

keep_alive = Trueeeg1 = [] #lists to store eeg data

eeg2 = []

eeg3 = []

eeg4 = []

timex = [] #list to store timestamp

Here, we start with creating our graph and style it. Afterward, we initialize a variable for our while loop so that we can reset the stream and plot the data.

while keep_alive == True:while board.get_board_data_count() < 250:

time.sleep(0.005)

data = board.get_current_board_data(250) eegdf = pd.DataFrame(np.transpose(data[eeg_channels]))

eegdf_col_names = ["ch1", "ch2", "ch3", "ch4"]

eegdf.columns = eegdf_col_names

timedf = pd.DataFrame(np.transpose(data[timestamp]))

This part’s pretty much a walk in the park. We wait for 250 samples of data so that the shape of our data stays constant. Next, we take our data and turn them into DataFrames by subsetting our data and transposing the array.

The Signal Processing

#this is inside the while look

for count, channel in enumerate(eeg_channels):

# filters work in-place

# Check Brainflow docs for more filters

if count == 0:

DataFilter.perform_bandstop(data[channel], sampling_rate, 60.0, 4.0, 4,

FilterTypes.BUTTERWORTH.value, 0) # bandstop 58 - 62

DataFilter.perform_bandpass(data[channel], sampling_rate, 21.0, 20.0, 4,

FilterTypes.BESSEL.value, 0) # bandpass 11 - 31

if count == 1:

DataFilter.perform_bandstop(data[channel], sampling_rate, 60.0, 4.0, 4,

FilterTypes.BUTTERWORTH.value, 0) # bandstop 58 - 62

DataFilter.perform_bandpass(data[channel], sampling_rate, 21.0, 20.0, 4,

FilterTypes.BESSEL.value, 0) # bandpass 11 - 31

if count == 2:

DataFilter.perform_bandstop(data[channel], sampling_rate, 60.0, 4.0, 4,

FilterTypes.BUTTERWORTH.value, 0) # bandstop 58 - 62

DataFilter.perform_bandpass(data[channel], sampling_rate, 21.0, 20.0, 4,

FilterTypes.BESSEL.value, 0) # bandpass 11 - 31

if count == 3:

DataFilter.perform_bandstop(data[channel], sampling_rate, 60.0, 4.0, 4,

FilterTypes.BUTTERWORTH.value, 0) # bandstop 58 - 62

DataFilter.perform_bandpass(data[channel], sampling_rate, 21.0, 20.0, 4,

FilterTypes.BESSEL.value, 0) # bandpass 11 - 31This code snippet is where all the signal processing happens. Essentially, we loop through all the EEG channels in our data and apply filters to each channel. If you are applying the same filters to each EEG channel, you could just loop through the channels normally without using enumerate and count. NOTE: The formatting is funky in Medium, the code is much clearer in the Github repository.

The Machine Learning

# Brainflow ML Model

bands = DataFilter.get_avg_band_powers(data, eeg_channels, sampling_rate, True)

feature_vector = np.concatenate((bands[0], bands[1]))# calc concentration

concentration_params = BrainFlowModelParams(BrainFlowMetrics.CONCENTRATION.value, BrainFlowClassifiers.KNN.value)

concentration = MLModel(concentration_params)

concentration.prepare()

print('Concentration: %f' % concentration.predict(feature_vector))

concentrated_measure = concentration.predict(feature_vector)

concentration.release()# calc relaxation

relaxation_params = BrainFlowModelParams(BrainFlowMetrics.RELAXATION.value, BrainFlowClassifiers.KNN.value)

relaxation = MLModel(relaxation_params)

relaxation.prepare()

print('Relaxation: %f' % relaxation.predict(feature_vector))

relaxed_measure = relaxation.predict(feature_vector)

relaxation.release()

Brainflow comes with an ML model already trained for you. The downside is that it only has two metrics that it can analyze but other than that it’s great for a proof of concept. We are trying to analyze neural oscillations (referred to as bands) to predict if you are relaxed or concentrated.

The program starts off by getting your average band powers and stitches your delta and theta wave data together in feature_vector. Afterward, we initialize the classifier model with our preferred metric and classifier algorithm and run it to calculate each metric. It then prints the calculation.

The Neurofeedba- wait seriously?

if concentrated_measure >= 0.5:

print("GOOD KEEP CONCENTRATING")

else:

print("WHERE IS THE CONCENTRATION??")

if relaxed_measure >= 0.5:

print("YES RELAX MORE")

else:

print("NO, START RELAXING")This is the “neurofeedback” portion of my program. I thought it would be funny if I had a program yelling at you to relax, so I made it. The print statements are just to explain the concept of neurofeedback, normally you would play a game or something similar.

The Plotting

#appending eeg data to lists

eeg1.extend(eegdf.iloc[:, 0].values)

eeg2.extend(eegdf.iloc[:, 1].values)

eeg3.extend(eegdf.iloc[:, 2].values)

eeg4.extend(eegdf.iloc[:, 3].values)

timex.extend(timedf.iloc[:, 0].values) # timestampsplt.cla()

#plotting eeg data

plt.plot(timex, eeg1, label="Channel 1", color="red")

plt.plot(timex, eeg2, label="Channel 2", color="blue")

plt.plot(timex, eeg3, label="Channel 3", color="orange")

plt.plot(timex, eeg4, label="Channel 4", color="purple")

plt.tight_layout()

keep_alive = False #resetting stream so that matplotlib can plot data

We’re in the final stretch! All we do here is extract the values in the EEG DataFrames (and the timestamp DataFrame) and append them to our lists that we created earlier. Then we just plot the data in the lists and we’re almost done!

The Execution

I did say almost, but to be fair, we are basically done. All we gotta do is call the function using FuncAnimation from the Matplotlib.animation submodule.

ani = FuncAnimation(plt.gcf(), main, interval=1000)

plt.tight_layout()

plt.autoscale(enable=True, axis="y", tight=True)

plt.show()Boom, it’s done. Now we have a fully functioning neurofeedback program with a live graph.

NOTE: The interval is in milliseconds, so 1000 milliseconds would be a second. For a more in-depth tutorial on Matplotlib.animation, check out Corey Schafer’s video on it.

The Ending

Although sad, this is the end of this tutorial. I hope you learned something after reading, and go out and build something of your own. If you do, don’t hesitate to contact me! Here is the Github repo, and the Gist as promised.

Hey! If you made it all the way down here I just want to say thank you for reading! If you enjoyed the article, clap it up and contact me on Twitter, Linkedin, or my email ahnaafk@gmail.com. Check out my website or subscribe to my newsletter while you’re at it.